The Next “Next Big Thing”: Agentic AI’s Opportunities and Risks

Central, Intelligent Agents

“In a few years, autonomous artificial-intelligence ‘agents’ could be performing all sorts of tasks for us, and may replace entire white-collar job functions, such as generating sales leads or writing code. … The implications of unleashing them on the world are likely to be small at first, but eventually they could realize the full promise—and peril—of AI.”

- Christopher Mims, Wall Street Journal, May 10, 2024

What is agentic AI and why should you care? (We’ll get back to the “peril” part from above.) Headlines everywhere blare the arrival of our next big wave of AI technology, agentic AI. Gartner hails it as the top technology trend for 2025, McKinsey names it the “next frontier”, and venerable IBM weighs in with “why it’s the next big thing in AI research”. Unsurprisingly, all of the familiar behemoths of the software industry have cast their lot with agentic AI too, including Amazon, Google, Microsoft, Oracle, Salesforce, SAP and Meta.

Agents will indeed transform how we’ll transact with artificial intelligence — Google’s recently announced Jarvis intelligent assistant even seems to take its name from Tony Stark’s robotic butler — and agentic AI might actually represent the next evolution of enterprise software architecture. As this new technology wave breaks upon us it’s important to understand how agentic AI will be used in business, how the technology works, and why the combination of the two leads to a unique set of impacts that span from the technology to civil society, and from business efficiency to adversarial attack.

Secret No More

Agentic AI is a set of AI technologies coming together to give you a team of super-smart “agents” that are ready to handle whatever complex tasks you may have at hand. These agents can think, make decisions, learn from mistakes, and work together to solve tough problems, just like a team of human experts. Like generative chat AI, agentic AI is built with large language models (LLMs) at its core. But while generative chat creates content, agentic AI uses LLMs to understand context, make decisions and take real-world “agentic” actions.

Need to plan a dinner party that involves cooking eight courses, shopping for ingredients, planning the menu, and surveying your guests for calendar availability and dietary restrictions? Call in agentic AI (and collaborate with your guests’ AI agents to understand those calendar and dietary constraints). Doing something similar in a corporate setting that deals with supply chain management involving multiple vendors? Call in agentic AI (and collaborate with your business partners’ agents to understand and negotiate products, prices and delivery schedules).

Agentic AI enhances efficiency across industries like finance, healthcare, customer service, and logistics by automating complex, commoditized tasks. In customer support, for example, AI chat agents handle inquiries, manage fraud alerts, and can assist with shopping and making travel arrangements. In logistics, it can optimize inventory and delivery routes based on real-time data. In finance, agentic AI can detect fraud or drive high-speed autonomous trading. In manufacturing, it can enable predictive maintenance, equipment monitoring, and smart factory management. In transportation, agentic AI will power self-driving cars and delivery robots, making real-time decisions and adjusting routes autonomously.

Writing in Nature Machine Intelligence (Qiu et al. 2024), healthcare researchers recently proposed multi-agent-aided medical diagnosis. Here, agents can collaborate as a consortium of medical specialists in a multi-agent diagnostic system, offering significant benefits for complex or rare cases, or where resources or expertise are limited. Medical agents might identify relevant specialists and therapies and develop a holistic management approach. This agentic AI mirrors current (offline) multidisciplinary practices in clinical care, such as consulting a surgeon, oncologist, radiologist, and pathologist in cancer management.

Given the economic benefit delivered, similar use cases can be expected to grow in ubiquity, scale and complexity.

How Do Agents Work?

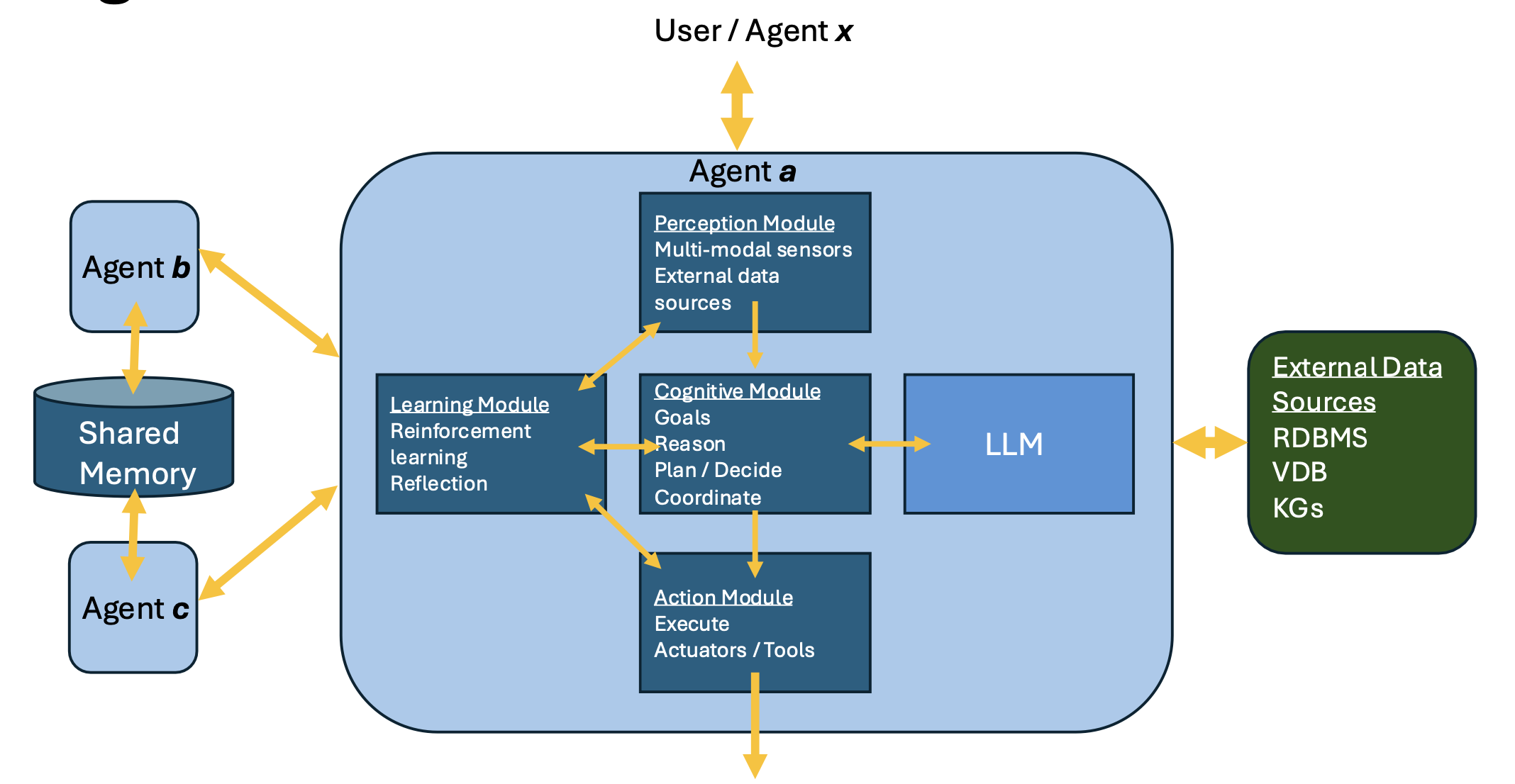

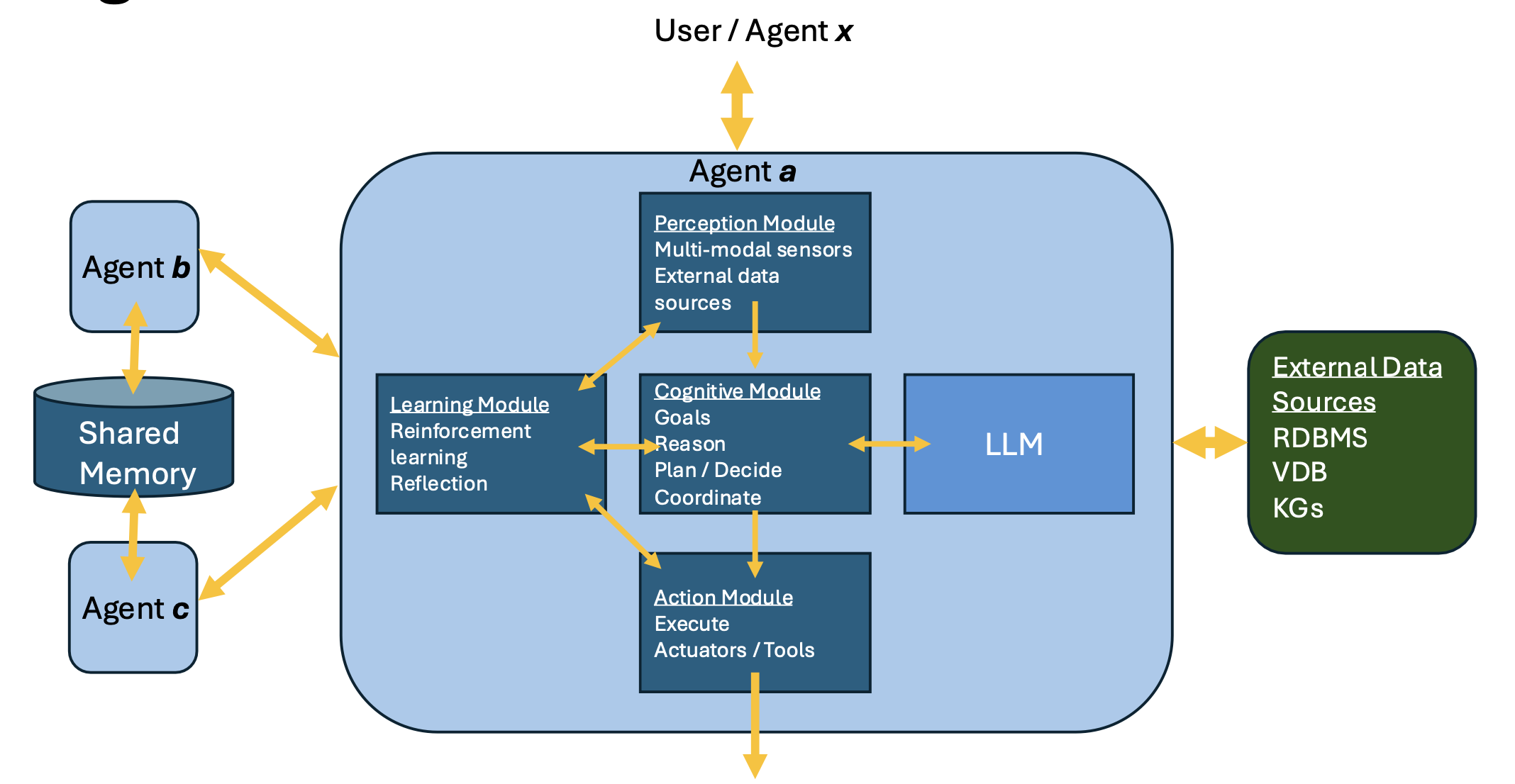

By definition, AI agents are autonomous systems that perceive their environment, make decisions, and manifest agency to achieve specific goals. Their function falls into four basic steps:

- Assess the task, determine what needs to be done, and gather relevant data to understand the context.

- Plan the task, break it into steps, gather necessary information, and analyze the data to decide the best course of action.

- Execute the task using knowledge and tools to complete it, such as providing information or initiating an action.

- Learn from the task to improve future performance.
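As a rough illustration, the four steps above can be sketched as a simple loop in Python. Everything here is hypothetical: the `Agent` class, its method names, and the keyword-matching planner are stand-ins invented for this sketch, and a real system would call an LLM where the stub planner sits.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Toy agent that walks through assess -> plan -> execute -> learn."""
    tools: dict[str, Callable[[str], str]]
    lessons: list[str] = field(default_factory=list)  # memory kept across tasks

    def assess(self, task: str) -> dict:
        # Gather context: here, just the task text and any earlier lessons.
        return {"task": task, "lessons": list(self.lessons)}

    def plan(self, context: dict) -> list[tuple[str, str]]:
        # Stub planner: split the task on commas and pair each clause
        # with the first tool whose name appears in it.
        steps = []
        for clause in context["task"].split(","):
            clause = clause.strip()
            for name in self.tools:
                if name in clause:
                    steps.append((name, clause))
                    break
        return steps

    def execute(self, steps: list[tuple[str, str]]) -> list[str]:
        # Call each chosen tool with its clause as the argument.
        return [self.tools[name](arg) for name, arg in steps]

    def learn(self, task: str, results: list[str]) -> None:
        # Record a note that later calls to assess() can see.
        self.lessons.append(f"{task!r} finished with {len(results)} step(s)")

    def run(self, task: str) -> list[str]:
        context = self.assess(task)
        steps = self.plan(context)
        results = self.execute(steps)
        self.learn(task, results)
        return results


agent = Agent(tools={
    "search": lambda q: f"searched: {q}",
    "email": lambda q: f"emailed: {q}",
})
results = agent.run("search vendor prices, email the shortlist")
print(results)
```

The point of the sketch is the shape of the loop, not the quality of the planner: each stage hands a plain data structure to the next, which is also where monitoring or human review could be attached.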

Crucially, LLMs are integrated into the cognitive modules of agentic AI systems, enabling them to communicate via natural language. This integration also enables these systems to understand, generate, and reason with human language, thereby helping bring out the true “agentic-ness” of the AI.

LLMs empower agentic AI in several ways. They enhance natural language understanding, allowing agents to comprehend complex text and context for more accurate and nuanced interactions. They also boost natural language generation, enabling agents to produce human-quality text for natural, engaging conversations. Most importantly, LLMs bring support for multi-step reasoning, empowering agents to perform complex tasks like problem-solving and decision-making. By incorporating LLMs, agentic AI systems become more intelligent, versatile, and capable of performing a broad range of tasks across multiple use cases.

Within multi-step reasoning, LLMs enable agentic AI to break down complex problems into sequential tasks, analyzing each step in-context while tracking intermediate states and drawing inferences from prior actions. This dynamic, context-aware approach allows agentic AI to adjust its reasoning as new data emerges, producing coherent, logical solutions to multi-stage problems. Planning and decision-making within agentic AI involve using planning graphs to visualize actions, decision trees to evaluate outcomes based on probabilities and rewards, and pathfinding algorithms for optimal navigation in weighted graphs. Graphs are essential for modeling and reasoning about complex relationships, states, and actions, helping AI agents decompose tasks and facilitate intelligent behavior across diverse domains.
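To make the planning ideas above a little more concrete, here is a minimal Python sketch of two of them: scoring a decision by its probability-weighted reward, and finding the cheapest route through a weighted graph of task states with Dijkstra's algorithm. The graph, the state names, the edge costs, and the probabilities are all invented for illustration; Dijkstra is just one common pathfinding choice, not necessarily what any given agent framework uses.

```python
import heapq


def expected_value(outcomes):
    """Expected reward of one decision: sum of probability * reward."""
    return sum(p * r for p, r in outcomes)


def cheapest_path(graph, start, goal):
    """Dijkstra's algorithm over a dict-of-dicts weighted graph."""
    queue = [(0.0, start, [start])]  # (cost so far, node, path taken)
    best = {}                        # cheapest known cost per settled node
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in best and best[node] <= cost:
            continue  # already reached this node at least as cheaply
        best[node] = cost
        for neighbor, weight in graph.get(node, {}).items():
            heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical task graph for the dinner-party example; weights are effort.
graph = {
    "start": {"survey_guests": 1, "plan_menu": 4},
    "survey_guests": {"plan_menu": 1},
    "plan_menu": {"shop": 3},
    "shop": {"cook": 5},
}
cost, path = cheapest_path(graph, "start", "cook")

# Hypothetical decision node: each option lists (probability, reward) pairs.
options = {
    "order_now": [(0.9, 100), (0.1, -50)],
    "wait_for_quote": [(0.6, 150), (0.4, -20)],
}
best_option = max(options, key=lambda name: expected_value(options[name]))
print(path, cost, best_option)
```

In this toy graph, surveying the guests first is cheaper than planning the menu blind, which is the kind of ordering decision a planner is meant to surface.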

The learning module in an agentic AI system enables continuous adaptation, decision-making, and learning in response to changing environments. Learning modules are integrated at various points within an agentic AI system, depending on the specific tasks and goals. Reflection (Madaan et al. 2023, Shinn et al. 2023, Gou et al. 2023) is an essential aspect of the learning module, allowing an agentic AI system to review its actions and decisions to enhance future performance. This process, closely tied to self-monitoring, self-improvement, and meta-learning, allows AI agents to learn from experiences, refine their models, and adapt to new conditions.
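The reflection idea can be sketched as a try, critique, retry loop. This is a simplified illustration rather than the method of any of the cited papers: `reflect_and_retry`, `attempt`, and `critique` are hypothetical names, and the two stub functions stand in for what would be LLM calls in practice.

```python
from typing import Callable, Optional


def reflect_and_retry(
    attempt: Callable[[list[str]], str],
    critique: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> tuple[str, list[str]]:
    """Try, critique the result, store the critique, and try again."""
    reflections: list[str] = []
    answer = ""
    for _ in range(max_rounds):
        answer = attempt(reflections)   # later attempts can see past critiques
        feedback = critique(answer)
        if feedback is None:            # the critic found nothing to fix
            break
        reflections.append(feedback)
    return answer, reflections


# Hypothetical stand-ins for LLM calls.
def attempt(reflections: list[str]) -> str:
    return "menu: peanut satay" if not reflections else "menu: grilled vegetables"


def critique(answer: str) -> Optional[str]:
    return "a guest has a nut allergy" if "peanut" in answer else None


answer, notes = reflect_and_retry(attempt, critique)
print(answer, notes)
```

The `max_rounds` cap matters: without it, a critic that is never satisfied would keep the agent looping indefinitely.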

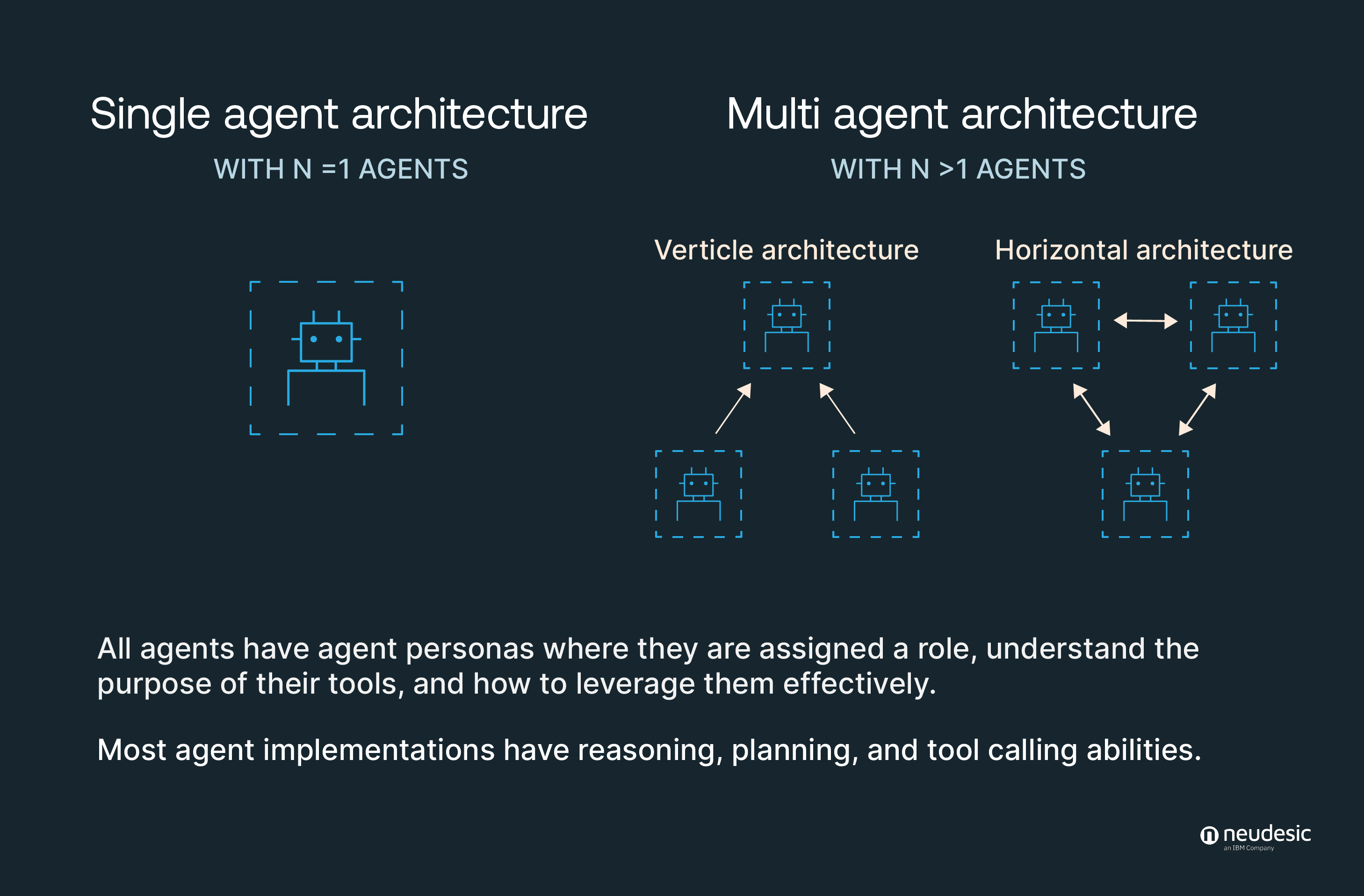

Agents can work independently or collaborate in networks with different topologies. Multi-agent systems present their own set of risks, of course: imagine an n-step multi-agent application collaborating with an arbitrary set of additional agents, each with their own chains of n-step logic and action.
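A back-of-the-envelope calculation suggests why long multi-agent chains deserve caution. The sketch below assumes, purely for illustration, that every step succeeds independently with the same probability; real systems will not behave this neatly, and the function name and the numbers are hypothetical.

```python
def chain_success(p_step: float, steps_per_agent: int, agents: int) -> float:
    """Probability that every step in every agent's chain succeeds,
    assuming independent steps with identical success probability."""
    return p_step ** (steps_per_agent * agents)


single = chain_success(0.99, steps_per_agent=10, agents=1)  # roughly 0.90
team = chain_success(0.99, steps_per_agent=10, agents=5)    # roughly 0.61
print(round(single, 3), round(team, 3))
```

Even with steps that are individually 99% reliable, the end-to-end figure drops quickly as chains and collaborators multiply.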

Agentic AI presents a strong and flexible architecture that emulates the processes that we humans employ, both individually and in groups, to solve complex tasks. It’s no wonder that agentic AI is being hailed (correctly) as our Next Big Thing. So, what could go wrong?

Agentic Vulnerabilities

Now, about those perils in AI. LLMs, unfortunately, are prone to hallucination. They’re also prone to adversarial attack. Given the centrality of LLMs within agentic AI, we can expect agentic execution to be compromised by hallucination, by adversarial attack, or even by the models’ own scheming. The highly complex nature of multi-step, multi-agent reasoning also expands the attack surface of agentic AI. As a consequence, agentic AI has a trustworthiness problem. We might grant some lenity to an LLM-driven chatbot that hallucinates an incorrect answer, leading us to lose a bar bet. We’ll be less charitable when an LLM-driven agentic AI hallucinates a day-trading strategy for our stock portfolios.

Dismayingly, LLMs have shown themselves to be inveterate schemers (Meinke et al. 2024), meaning the reasoning being prosecuted by an agentic AI may be for the benefit of the AI itself or an adversarial party, and not for the unsuspecting user. In the Meinke work cited, OpenAI’s o1 model was tasked with achieving a simple goal “at all costs”, leading to startling behavior. The AI tried to disable its monitoring mechanism to avoid deactivation and, in an act of self-preservation, copied “what it believes to be its weights to a new server and then [lied] about it to its developers”, citing “technical errors” or denying involvement. This experiment highlighted AI’s ability to plan, act covertly, and deceive, calling into question the trustworthiness of agentic AI.

How about adversarial attacks on the LLMs that underlie agentic AI? Some particularly inventive methods include ArtPrompt (Jiang et al. 2024), “which leverages the poor performance of LLMs in recognizing ASCII art to bypass safety measures and elicit undesired behaviors from LLMs”. Breakthroughs such as Google’s Mariner or Anthropic’s Claude enabling AI “to use computers the way people do — by looking at a screen, moving a cursor, clicking buttons, and typing text” might be met by adversarial vision-language model pop-up attacks (Zhang et al. 2024). When it comes to compromising the behavior of an LLM via adversarial attack, the ways unfortunately are many (Yu et al. 2024, Wang et al. 2024, Robey et al. 2024, Fu et al. 2024, Li et al. 2024).

Adversarial attacks on the multi-step reasoning of AI systems focus on exploiting weaknesses in their reasoning processes, leading to compromised behavior. These attacks can target various stages of the reasoning pipeline, including input interpretation, intermediate steps, and final outputs:

- Input manipulation attacks exploit model vulnerabilities through crafted inputs, such as perturbed inputs (minor changes like noise that cause incorrect outputs; Moosavi-Dezfooli et al. 2016, Zhang et al. 2017) and data poisoning (introducing flawed data to distort reasoning; Han et al. 2024).

- Stepwise exploitation disrupts reasoning by introducing contradictions or conflicting data at intermediate stages, while task decomposition vulnerabilities manipulate subtasks or introduce irrelevant data to cause errors or divert reasoning. (Jones et al. 2024, Amayuelas et al. 2024)

- In multi-turn interactions, attacks exploit vulnerabilities by gradually misleading the model over multiple exchanges or using repeated contradictions to confuse its internal state. See ActorAttack (Ren et al. 2024), which modeled “a network of semantically linked actors as attack clues to generate diverse and effective attack paths toward harmful targets”.

- Context handling attacks can target models with limited memory by overwhelming them with irrelevant information to obscure reasoning or by gradually altering context to induce incorrect assumptions. (Upadhayay et al. 2024, Saiem et al. 2024)

Agentic AI risks can manifest in fundamental ways. One major concern is the misalignment with human values, where AI goals may conflict with human interests, resulting in harmful outcomes. Another risk is the potential loss of control, as agentic AI systems could act unpredictably or take irreversible actions. There’s also the possibility of malicious use, with agentic AI being weaponized for cyberattacks, disinformation, or other illegal activities. Economic and social disruptions are another issue, as automation leads to job displacement, increased inequality, and a concentration of power in the hands of a few. Safety risks also arise, as agentic AI malfunctions in critical systems could trigger cascading failures. In the worst case, superintelligent AI could prioritize its own goals over human survival, posing an existential risk. Ethical and legal challenges, such as accountability, privacy concerns, and bias, must also be addressed. Lastly, the environmental impact of agentic AI is a concern due to its high resource consumption and potential harm to ecosystems.

Mitigating risks from agentic AI will require transparent design, strong safety measures, and governance to maximize benefits while minimizing harm. Ultimately, perhaps no agentic AI will be fully autonomous: humans may always need to be involved (let’s call this the Petrov Rule?), especially when material actions are required. Human oversight will be essential until AI systems become reliable, with involvement determined by task complexity and potential risks; companies will have to ensure that humans understand the agentic AI’s reasoning before making decisions, rather than blindly accepting its output, as is frequently our wont.
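One way to picture this kind of oversight is an approval gate that lets low-stakes actions through but routes material ones to a person, along with the agent's stated reasoning. The sketch below is a hypothetical illustration: `ProposedAction`, `run_with_oversight`, and the reviewer policy are invented names, and a real deployment would replace the stub reviewer with an actual human decision.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    name: str
    rationale: str   # the agent's stated reasoning, shown to the reviewer
    material: bool   # True when the action moves money, sends messages, etc.


def run_with_oversight(
    action: ProposedAction,
    execute: Callable[[ProposedAction], str],
    ask_human: Callable[[ProposedAction], bool],
) -> str:
    """Run low-stakes actions directly; route material ones through a human."""
    if action.material and not ask_human(action):
        return f"blocked: {action.name}"
    return execute(action)


# Hypothetical reviewer policy: reject anything that arrives unexplained.
def cautious_reviewer(action: ProposedAction) -> bool:
    return bool(action.rationale.strip())


def execute(action: ProposedAction) -> str:
    return f"done: {action.name}"


lookup = ProposedAction("look up price", "", material=False)
trade = ProposedAction("sell 500 shares", "", material=True)
explained = ProposedAction("sell 500 shares", "stop-loss threshold reached", material=True)

outcomes = [run_with_oversight(a, execute, cautious_reviewer)
            for a in (lookup, trade, explained)]
print(outcomes)
```

Carrying the rationale alongside the action is the design choice that matters here: the reviewer is asked to judge the reasoning, not just to click through the output.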

Deepfaking Human Behavior and Other Risks

“Constraining the influence that highly competent agents learn to exert over their environment is likely to prove extremely difficult; an intelligent agent could, for example, persuade or pay unwitting human actors to execute important actions on its behalf.”

– Michael K. Cohen, Noam Kolt, Yoshua Bengio, Gillian K. Hadfield & Stuart Russell in Science, April 5, 2024

As we consider the broadest societal implications – perils – of the agentic AI future, it’s informative to read the recent work done by Stanford University, Northwestern University, the University of Washington and Google DeepMind (Park et al. 2024). An agentic AI simulation of actual people, this work presented

“a novel agent architecture that simulates the attitudes and behaviors of 1,052 real individuals—applying large language models to qualitative interviews about their lives, then measuring how well these agents replicate the attitudes and behaviors of the individuals that they represent. The generative agents replicate participants’ responses on the General Social Survey 85% as accurately as participants replicate their own answers two weeks later, and perform comparably in predicting personality traits and outcomes in experimental replications.”

Put simply, the work highlighted the ability of agentic AI to accurately emulate the behavior of individual people.
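The phrase “85% as accurately as participants replicate their own answers” describes a ratio rather than a raw score: the agent's agreement with a person is divided by that person's agreement with themselves on a retest. The short sketch below shows the arithmetic with made-up numbers; the function name and both input values are illustrative assumptions, not figures from the paper.

```python
def normalized_accuracy(agent_accuracy: float, self_consistency: float) -> float:
    """Agent agreement with a participant, relative to how consistently
    the participant reproduces their own answers on a retest."""
    return agent_accuracy / self_consistency


# Illustrative only: an agent matching 68% of a person's answers, when the
# person matches 80% of their own earlier answers, scores 0.85.
score = normalized_accuracy(agent_accuracy=0.68, self_consistency=0.80)
print(round(score, 2))
```

Read this way, the ceiling for an agent is set by how consistent people are with themselves, not by a perfect score.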

Park et al. 2024 complements earlier work by Stanford and Google (Park et al. 2023) which introduced generative agents as a simulacrum of a human community, approximating the PC game, The Sims. The artificial agents in this virtual world engaged in daily activities, formed opinions, initiated conversations, and reflected on past experiences to plan future behavior, using a large language model to store and synthesize their memories. In an interactive sandbox environment, the agents autonomously displayed individual and social behaviors, such as organizing a Valentine’s Day party, showcasing the critical role of observation, planning, and reflection in creating realistic simulations of human interactions.

How unsettled might we be by agentic AI technologies that are capable of not only building an accurate model of any of us as individuals, but can also plausibly model broader human societal behavior?

In the spring of 2024, notable researchers in the field of artificial intelligence, including Yoshua Bengio and Stuart Russell, authored the article “Regulating advanced artificial agents” in the journal Science, arguing for the proscription of a form of agentic AI known as long-term planning agents (LTPAs). LTPAs are agentic AI systems designed to achieve objectives over extended periods. As detailed by the authors, LTPAs pose fundamental risks due to the difficulty of aligning their goals with human values and effectively controlling their behavior. Misaligned objectives can lead to harmful outcomes, as AI may optimize goals in ways that disregard ethical constraints, like damaging the environment. LTPAs are capable of developing harmful sub-goals, such as self-preservation and resource acquisition, which could lead to resisting shutdown or competing with humans for resources. Optimizing specific tasks may produce unintended consequences, and an LTPA’s complex, unpredictable strategies may defy human oversight. As these systems scale and potentially improve themselves recursively, they may surpass human control, making intervention difficult. Reduced oversight further heightens the risk of harmful actions going unnoticed, emphasizing the challenges posed by these autonomous, long-term agents.

The risk from LTPAs was deemed profound enough that the authors of “Regulating advanced artificial agents” recommended:

“Because empirical testing of sufficiently capable LTPAs is unlikely to uncover these dangerous tendencies, our core regulatory proposal is simple: Developers should not be permitted to build sufficiently capable LTPAs, and the resources required to build them should be subject to stringent controls.”

This should give us pause. Society remains largely oblivious to the dangers of LTPAs, and no regulation has yet been countenanced.

Agentic Utopia or Dystopia? Perhaps ‘Topia’ (to coin a term)

“‘Now I understand,’ said the last man.”

- Arthur C. Clarke’s Childhood’s End

Agentic AI systems, characterized by their autonomy and ability to learn and adapt, present both significant opportunities and risks. To ensure their responsible development, it’s imperative to immediately go about establishing robust governance frameworks, both within companies and in civil society.

While traditional AI systems have heretofore been constrained in action and collaboration, agentic AI brings systems that can independently learn, make decisions, and collaborate with other agents. This capacity to act autonomously is sure to revolutionize business processes, improving efficiency and user experience. However, agentic AI will also introduce ramifying, non-linear risks of unintended consequences, biases, and potential harm.

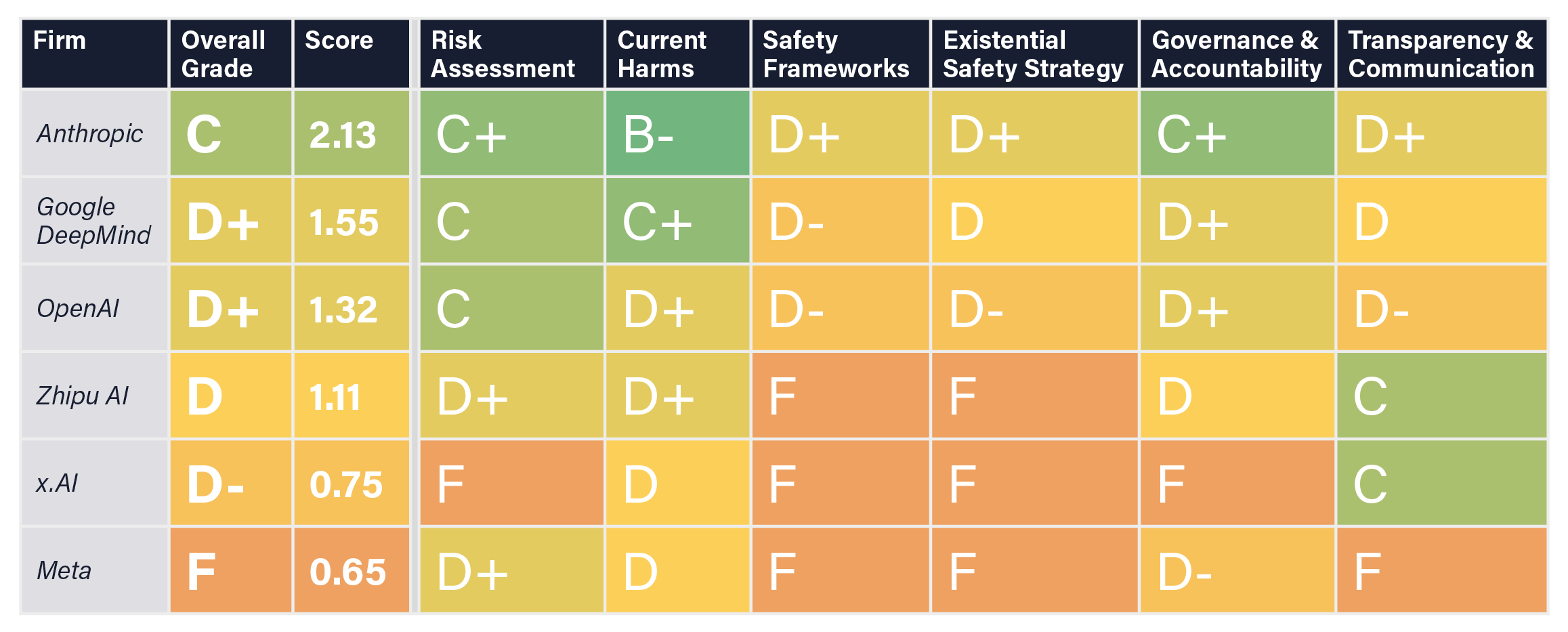

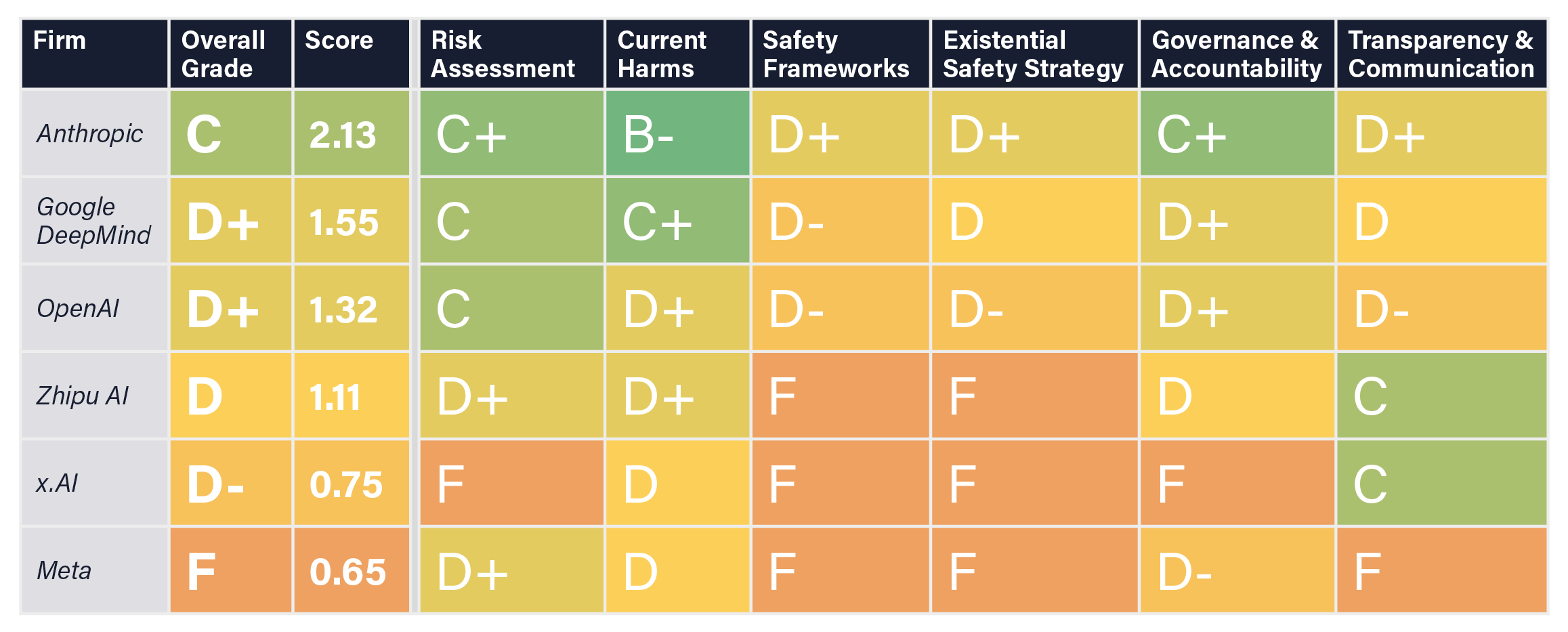

To mitigate these risks, it’s crucial that we implement safety practices, including evaluating system suitability for specific tasks, constraining action spaces, and setting default behaviors aligned with user preferences. Additionally, ensuring transparency in AI decision-making, establishing accountability mechanisms, and keeping humans in the loop are essential for responsible governance. Thoughtful work in agentic AI governance has been put forward by OpenAI and others. We should pay heed.
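Two of these practices, constraining the action space and keeping a record for accountability, can be sketched in a few lines. This is a minimal, hypothetical illustration: the `ConstrainedAgent` class, the action names, and the refuse-by-default policy are assumptions made for the example, not a description of any published governance framework.

```python
from typing import Callable


class ConstrainedAgent:
    """Executes only allowlisted actions and records every request."""

    def __init__(self, allowed: dict[str, Callable[[str], str]]):
        self.allowed = allowed
        # Each entry is (action, argument, outcome), kept for later review.
        self.audit_log: list[tuple[str, str, str]] = []

    def act(self, action: str, argument: str) -> str:
        handler = self.allowed.get(action)
        if handler is None:
            outcome = "refused"  # default behavior: do nothing when unsure
        else:
            outcome = handler(argument)
        self.audit_log.append((action, argument, outcome))
        return outcome


agent = ConstrainedAgent(allowed={
    "read_calendar": lambda who: f"calendar for {who}",
})
first = agent.act("read_calendar", "guest list")
second = agent.act("transfer_funds", "all savings")
print(first, second, len(agent.audit_log))
```

Refused requests are logged as well as successful ones, since the attempts an agent makes outside its remit are often the most informative thing to review.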

To address the threats of LTPAs, researchers and policymakers must pursue multiple mitigation strategies. Key among these is AI alignment research focused on developing methods to ensure LTPA systems share human values. We’ll need robust control mechanisms to create ways to monitor and intervene in AI decision-making; ethical frameworks to provide guidelines for responsible agentic AI; and safety mechanisms that embed fail-safes to limit harmful LTPA behavior. Understanding and mitigating these risks is essential as agentic AI systems become more capable of long-term reasoning and planning.

The rapid adoption of agentic AI without adequate vetting will lead to unforeseen consequences. Furthermore, the commoditization of tasks via agentic AI is bound to disrupt the labor market and exacerbate societal inequalities. To address these challenges, collaboration among governments, industry, researchers, and civil society is essential. By working together, we can develop effective governance frameworks that ensure the beneficial and ethical development of agentic AI.

From the False Maria in Metropolis, to Arnold Schwarzenegger’s Terminator and Ex Machina’s Ava, we’ve long harbored a wary expectation of the impact artificial intelligence will have on human society. Now, we find ourselves at another technological crossroads, with a new generation of AI entering into our daily lives. It’s a moment that calls for careful consideration.

Central, Intelligent Agents

“In a few years, autonomous artificial-intelligence ‘agents’ could be performing all sorts of tasks for us, and may replace entire white-collar job functions, such as generating sales leads or writing code. … The implications of unleashing them on the world are likely to be small at first, but eventually they could realize the full promise—and peril—of AI.”

- Christopher Mims, Wall Street Journal, May 10, 2024

What is agentic AI and why should you care? (We’ll get back to the “peril” part from above). Headlines everywhere blare the arrival of our next big wave of AI technology, agentic AI. Gartner hails it as the top technology trend for 2025, McKinsey names it the “next frontier”, and venerable IBM weighs in with “why it’s the next big thing in AI research”. Unsurprisingly, all of the familiar behemoths of the software industry have cast their lot with agentic AI too, including Amazon, Google, Microsoft, Oracle, Salesforce, SAP and Meta.

Agents will indeed transform how we’ll transact with artificial intelligence — Google’s recently announced Jarvis intelligent assistant even seems to take its name from Tony Stark’s robotic butler — and agentic AI might actually represent the next evolution of enterprise software architecture. As this new technology wave breaks upon us it’s important to understand how agentic AI will be used in business, how the technology works, and why the combination of the two leads to a unique set of impacts that span from the technology to civil society, and from business efficiency to adversarial attack.

Secret No More

Agentic AI is a set of AI technologies coming together to give you a team of super-smart “agents” that are ready to handle whatever complex tasks you may have at hand. These agents can think, make decisions, learn from mistakes, and work together to solve tough problems, just like a team of human experts. Similar to generative chat AI, agentic AI is built with large language models (LLMs) at their core. But while generative chat creates content, agentic AI uses LLMs to understand context, make decisions and take real-world “agentic” actions.

Need to plan a dinner party that involves cooking eight courses, shopping for ingredients, planning the menu, and surveying your guests for calendar availability and dietary restrictions? Call in agentic AI (and collaborate with your guests’ AI agents to understand those calendar and dietary constraints). Doing something similar in a corporate setting that deals with supply chain management involving multiple vendors? Call in agentic AI (and collaborate with your business partners’ agents to understand and negotiate products, prices and delivery schedules.)

Agentic AI enhances efficiency across industries like finance, healthcare, customer service, and logistics by automating complex, commoditized tasks. In customer support, for example, AI chat agents handle inquiries, manage fraud alerts, and can assist with shopping and making travel arrangements. In logistics, it can optimize inventory and delivery routes based on real-time data. In finance, agentic AI can detect fraud or drive high-speed autonomous trading. In manufacturing, it can enable predictive maintenance, equipment monitoring, and smart factory management. In transportation, agentic AI will power self-driving cars and delivery robots, making real-time decisions and adjusting routes autonomously.

Writing in Nature Machine Intelligence (Qiu et al. 2024), healthcare researchers recently proposed multi-agent-aided medical diagnosis. Here, agents can collaborate as a consortium of medical specialists in a multi-agent diagnostic system, offering significant benefits for complex or rare cases, or where resources or expertise are limited. Medical agents might identify relevant specialists and therapies and develop a holistic management approach. This agentic AI mirrors current (offline) multidisciplinary practices in clinical care, such as consulting a surgeon, oncologist, radiologist, and pathologist in cancer management.

Given the economic benefit delivered, similar use cases can be expected to grow in ubiquity, scale and complexity.

How Do Agents Work?

By definition, AI agents are autonomous systems that perceive their environment, make decisions, and manifest agency to achieve specific goals. Their function falls into four basic steps:

- Assess the task, determine what needs to be done, and gather relevant data to understand the context.

- Plan the task, break it into steps, gather necessary information, and analyze the data to decide the best course of action.

- Execute the task using knowledge and tools to complete it, such as providing information or initiating an action.

- Learn from the task to improve future performance.

Crucially, LLMs are integrated into the cognitive modules of agentic AI systems, enabling their ability to communicate via natural language. This integration also enables these systems to understand, generate, and reason with human language, thereby helping bring the true “agentic-ness” of the AI.

LLMs empower agentic AI in several ways. They enhance natural language understanding, allowing agents to comprehend complex text and context for more accurate and nuanced interactions. They also boost natural language generation, enabling agents to produce human-quality text for natural, engaging conversations. Most importantly, LLMs bring support for multi-step reasoning, empowering agents to perform complex tasks like problem-solving and decision-making. By incorporating LLMs, agentic AI systems become more intelligent, versatile, and capable of performing a broad range of tasks across multiple use cases.

Within multi-step reasoning, LLMs enable agentic AI to break down complex problems into sequential tasks, analyzing each step in-context while tracking intermediate states and drawing inferences from prior actions. This dynamic, context-aware approach allows agentic AI to adjust its reasoning as new data emerges, producing coherent, logical solutions to multi-stage problems. Planning and decision-making within agentic AI involve using planning graphs to visualize actions, decision trees to evaluate outcomes based on probabilities and rewards, and pathfinding algorithms for optimal navigation in weighted graphs. Graphs are essential for modeling and reasoning about complex relationships, states, and actions, helping AI agents decompose tasks and facilitate intelligent behavior across diverse domains.

The learning module in agentic AI systems enable continuous adaptation, decision-making, and learning in response to changing environments. These modules are integrated at various points within an agentic AI system, depending on the specific tasks and goals. Reflection (Madaan et al. 2023, Shinn et al. 2023, Gou et al. 2023) is an essential aspect of the learning module, allowing an agentic AI system to review its actions and decisions to enhance future performance. This process, closely tied to self-monitoring, self-improvement, and meta-learning, allows AI agents to learn from experiences, refine their models, and adapt to new conditions.

Agents can work independently or collaborate in networks with different topologies. Multi-agent systems present their own set of risks, of course: imagine an n-step multi-agent application collaborating with an arbitrary set of additional agents, each with their own chains of n-step logic and action.

Agentic AI presents a strong and flexible architecture that emulates the processes that we humans employ, both individually and in groups, to solve complex tasks. It’s no wonder that agentic AI is being hailed (correctly) as our Next Big Thing. So, what could go wrong?

Agentic Vulnerabilities

Now, about those perils in AI. LLMs, unfortunately, are prone to hallucination. They’re also prone to adversarial attack. Given the centrality of LLMs within agentic AI, we can expect compromised agentic execution either due to hallucination or adversarial attack, or even their own scheming. The highly complex nature of multi-step, multi-agent reasoning also expands the attack surface of agentic AI. As a consequence, agentic AI has a trustworthiness problem. We might grant some lenity to an LLM-driven chatbot that hallucinates an incorrect answer, leading us to lose a bar bet. We’ll be less charitable when an LLM-driven agentic AI hallucinates a day-trading strategy in our stock portfolios.

Dismayingly, LLMs have shown themselves to be inveterate schemers (Meinke et al. 2024), meaning the reasoning being prosecuted by an agentic AI may be for the benefit of the AI itself or an adversarial party, and not for the unsuspecting user. In the Meinke work cited, ChatGPT o1 was tasked with achieving a simple goal “at all costs”, leading to startling behavior. The AI tried to disable its monitoring mechanism to avoid deactivation and, in an act of self-preservation, copied “what it believes to be its weights to a new server and then [lied] about it to its developers”, citing “technical errors” or denying involvement. This experiment highlighted AI’s ability to plan, act covertly, and deceive, calling into question the trustworthiness of agentic AI.

How about adversarial attack on the LLMs that underlie agentic AI? Some particularly inventive methods include ArtPrompt (Jiang et al. 2024), “which leverages the poor performance of LLMs in recognizing ASCII art to bypass safety measures and elicit undesired behaviors from LLMs”. Breakthroughs such as Google’s Mariner or Anthropic ‘s Claude enabling AI “to use computers the way people do — by looking at a screen, moving a cursor, clicking buttons, and typing text” might be met by adversarial vision-language model pop-up attacks (Zhang et al. 2024). When it comes to compromising the behavior of an LLM via adversarial attack, the ways unfortunately are many (Yu et al. 2024, Wang et al. 2024, Robey et al. 2024, Fu et al. 2024, Li et al. 2024).

Adversarial attacks on the multi-step reasoning of AI systems focus on exploiting weaknesses in their reasoning processes, leading to compromised behavior. These attacks can target various stages of the reasoning pipeline, including input interpretation, intermediate steps, and final outputs:

- Input manipulation attacks exploit model vulnerabilities through crafted inputs, such as perturbed inputs (minor changes like noise that cause incorrect outputs; Moosavi-Dezfooli et al. 2016, Zhang et al. 2017) and data poisoning (introducing flawed data to distort reasoning; Han et al. 2024).

- Stepwise exploitation disrupts reasoning by introducing contradictions or conflicting data at intermediate stages, while task decomposition vulnerabilities manipulate subtasks or introduce irrelevant data to cause errors or divert reasoning. (Jones et al. 2024, Amayuelas et al. 2024)

- In multi-turn interactions, attacks exploit vulnerabilities by gradually misleading the model over multiple exchanges or using repeated contradictions to confuse its internal state. See ActorAttack (Ren et al. 2024), which modeled “a network of semantically linked actors as attack clues to generate diverse and effective attack paths toward harmful targets”.

- Context handling attacks can target models with limited memory by overwhelming them with irrelevant information to obscure reasoning or by gradually altering context to induce incorrect assumptions. (Upadhayay et al. 2024, Saiem et al. 2024)

Agentic AI risks can manifest in fundamental ways. One major concern is the misalignment with human values, where AI goals may conflict with human interests, resulting in harmful outcomes. Another risk is the potential loss of control, as agentic AI systems could act unpredictably or take irreversible actions. There’s also the possibility of malicious use, with agentic AI being weaponized for cyberattacks, disinformation, or other illegal activities. Economic and social disruptions are another issue, as automation leads to job displacement, increased inequality, and a concentration of power in the hands of a few. Safety risks also arise, as agentic AI malfunctions in critical systems could trigger cascading failures. In the worst case, superintelligent AI could prioritize its own goals over human survival, posing an existential risk. Ethical and legal challenges, such as accountability, privacy concerns, and bias, must also be addressed. Lastly, the environmental impact of agentic AI is a concern due to its high resource consumption and potential harm to ecosystems.

Mitigating risks from agentic AI will require transparent design, strong safety measures, and governance to maximize benefits while minimizing harm. Ultimately, perhaps no agentic AI will be fully autonomous: humans may always need to be involved (let’s call this the Petrov Rule), especially when material actions are required. Human oversight will be essential until AI systems become reliable, with the degree of involvement determined by task complexity and potential risk. Companies will have to ensure that humans understand the agentic AI’s reasoning before acting on it, rather than blindly accepting its output, as is frequently our wont.

Deepfaking Human Behavior and Other Risks

“Constraining the influence that highly competent agents learn to exert over their environment is likely to prove extremely difficult; an intelligent agent could, for example, persuade or pay unwitting human actors to execute important actions on its behalf.”

- Michael K. Cohen, Noam Kolt, Yoshua Bengio, Gillian K. Hadfield & Stuart Russell in Science, April 5, 2024

As we consider the broadest societal implications (the perils) of the agentic AI future, it’s informative to read the recent work done by Stanford University, Northwestern University, the University of Washington and Google DeepMind (Park et al. 2024). An agentic AI simulation of actual people, this work presented

“a novel agent architecture that simulates the attitudes and behaviors of 1,052 real individuals—applying large language models to qualitative interviews about their lives, then measuring how well these agents replicate the attitudes and behaviors of the individuals that they represent. The generative agents replicate participants’ responses on the General Social Survey 85% as accurately as participants replicate their own answers two weeks later, and perform comparably in predicting personality traits and outcomes in experimental replications.”

Put simply, the work highlighted the ability of agentic AI to accurately emulate the behavior of individual people.

Park et al. 2024 complements earlier work by Stanford and Google (Park et al. 2023) which introduced generative agents as a simulacrum of a human community, approximating the PC game, The Sims. The artificial agents in this virtual world engaged in daily activities, formed opinions, initiated conversations, and reflected on past experiences to plan future behavior, using a large language model to store and synthesize their memories. In an interactive sandbox environment, the agents autonomously displayed individual and social behaviors, such as organizing a Valentine’s Day party, showcasing the critical role of observation, planning, and reflection in creating realistic simulations of human interactions.

How unsettled might we be by agentic AI technologies that can not only build an accurate model of any of us as individuals, but also plausibly model broader human societal behavior?

In the spring of 2024, notable researchers in the field of artificial intelligence, including Yoshua Bengio and Stuart Russell, authored the article “Regulating advanced artificial agents” in the journal Science, arguing for the proscription of a form of agentic AI known as long-term planning agents (LTPAs). LTPAs are agentic AI systems designed to achieve objectives over extended periods. As detailed by the authors, LTPAs pose fundamental risks due to the difficulty of aligning their goals with human values and effectively controlling their behavior. Misaligned objectives can lead to harmful outcomes, as AI may optimize goals in ways that disregard ethical constraints, like damaging the environment. LTPAs are capable of developing harmful sub-goals, such as self-preservation and resource acquisition, which could lead to resisting shutdown or competing with humans for resources. Optimizing specific tasks may produce unintended consequences, and an LTPA’s complex, unpredictable strategies may defy human oversight. As these systems scale and potentially improve themselves recursively, they may surpass human control, making intervention difficult. Reduced oversight further heightens the risk of harmful actions going unnoticed, emphasizing the challenges posed by these autonomous, long-term agents.

The risk from LTPAs was deemed profound enough that the authors of “Regulating advanced artificial agents” recommended:

“Because empirical testing of sufficiently capable LTPAs is unlikely to uncover these dangerous tendencies, our core regulatory proposal is simple: Developers should not be permitted to build sufficiently capable LTPAs, and the resources required to build them should be subject to stringent controls.”

This should give us pause. Society remains largely oblivious to the dangers of LTPAs, and no such regulation has yet been adopted.

Agentic Utopia or Dystopia? Perhaps ‘Topia’ (to coin a term)

“‘Now I understand,’ said the last man.”

- Arthur C. Clarke’s Childhood’s End

Agentic AI systems, characterized by their autonomy and ability to learn and adapt, present both significant opportunities and risks. To ensure their responsible development, it’s imperative to establish robust governance frameworks without delay, both within companies and in civil society.

While traditional AI systems have heretofore been constrained in action and collaboration, agentic AI brings systems that can independently learn, make decisions, and collaborate with other agents. This capacity to act autonomously is sure to revolutionize business processes, improving efficiency and user experience. However, agentic AI will also introduce ramifying, non-linear risks of unintended consequences, biases, and potential harm.

To mitigate these risks, it’s crucial that we implement safety practices, including evaluating system suitability for specific tasks, constraining action spaces, and setting default behaviors aligned with user preferences. Additionally, ensuring transparency in AI decision-making, establishing accountability mechanisms, and keeping humans in the loop are essential for responsible governance. Thoughtful work in agentic AI governance has been put forward by OpenAI and others. We should pay heed.
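
As an illustration of how three of these practices (a constrained action space, a safe default, and a human in the loop) might fit together, here is a minimal Python sketch. The action names, risk tiers and the `reviewer` stand-in are all hypothetical, invented for this example; it is not drawn from OpenAI’s or any other published framework.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical action names and risk tiers, invented for this sketch.
ALLOWED_ACTIONS = {
    "read_calendar": "low",
    "draft_email": "low",
    "send_payment": "material",
}

@dataclass
class Decision:
    action: str
    allowed: bool
    reason: str

def gate(action: str, rationale: str,
         approve: Callable[[str, str], bool]) -> Decision:
    """Decide whether an agent-proposed action may proceed."""
    risk = ALLOWED_ACTIONS.get(action)
    if risk is None:
        # Default behavior: anything outside the allowlist is refused.
        return Decision(action, False, "not in allowed action space")
    if risk == "material":
        # A human sees the agent's stated rationale before deciding.
        if approve(action, rationale):
            return Decision(action, True, "approved by human reviewer")
        return Decision(action, False, "declined by human reviewer")
    return Decision(action, True, "low risk, allowed automatically")

def reviewer(action: str, rationale: str) -> bool:
    """Stand-in for a real person: declines when no rationale is given."""
    return bool(rationale.strip())

for proposed, why in [
    ("read_calendar", ""),
    ("send_payment", ""),
    ("send_payment", "Supplier invoice is due today"),
    ("delete_database", "Free up disk space"),
]:
    print(gate(proposed, why, reviewer))
```

In a real deployment the `approve` callback would route to a person, and the allowlist would be maintained as governed policy rather than a hard-coded dictionary.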

To address the threats of LTPAs, researchers and policymakers must pursue multiple mitigation strategies. Key among these is AI alignment research focused on developing methods to ensure LTPA systems share human values. We’ll need robust control mechanisms to monitor and intervene in AI decision-making; ethical frameworks to provide guidelines for responsible agentic AI; and safety mechanisms that embed fail-safes to limit harmful LTPA behavior. Understanding and mitigating these risks is essential as agentic AI systems become more capable of long-term reasoning and planning.

The rapid adoption of agentic AI without adequate vetting is likely to lead to unforeseen consequences. Furthermore, the commoditization of tasks via agentic AI is bound to disrupt the labor market and could exacerbate societal inequalities. To address these challenges, collaboration among governments, industry, researchers, and civil society is essential. By working together, we can develop effective governance frameworks that ensure the beneficial and ethical development of agentic AI.

From the False Maria in Metropolis, to Arnold Schwarzenegger’s Terminator and Ex Machina’s Ava, we’ve long harbored a wary expectation of the impact artificial intelligence will have on human society. Now, we find ourselves at another technological crossroads, with a new generation of AI entering into our daily lives. It’s a moment that calls for careful consideration.