Not Just LLMs: How Generative AI Will Be Used in the Enterprise

“Someday” is Today

To understand generative AI’s potential in the enterprise, it helps to read Isaac Asimov. Yes, science fiction from way back in the 1940s and 1950s. Asimov, for example, arguably anticipated Big Data. In his Foundation Trilogy, a mathematics professor named Hari Seldon develops a large-scale statistical method for predicting the course of human behavior, presaging much of our data-driven reality today. Asimov also posited the first set of (still-applicable) guidelines for ethical AI, enshrined as the Three Laws of Robotics and introduced in his 1942 short story Runaround.

Another Asimov short story, 1956’s Someday, features a computer whose job is to generate stories for humans. The name of this chatbot? Bard. In the telling, Bard gets upgraded in a process that sounds much like training on new data, which leads to the story’s ominous conclusion. Someday ends with Bard reciting a sinister tale about a forlorn little computer named Bard, surrounded by “cruel step-people”: “And the little computer knew then that computers would always grow wiser and more powerful until someday… someday…” (How did Google decide to name its chatbot?)

What else did Asimov foretell? With generative AI in mind, was there a numinous Princess Gen of Ai inhabiting one of his novels, foreshadowing our present? Almost.

The Physics of Generative AI

“How can the net amount of entropy of the universe be massively decreased?”

Alexander Adell to Multivac in Isaac Asimov’s “The Last Question” (1956)

Asimov’s story above revolves around the question, repeatedly posed to a preternaturally powerful computer named Multivac, of reversing the universe’s inexorable march toward maximum entropy and eventual heat death. Multivac’s solution, after all data had been “completely correlated and put together in all possible relationships”, is generative AI at its finest, with a prompt that creates something from nothing.

Today, alas, the potential of generative AI is seen almost exclusively through the lens of LLM-driven, consumer-focused applications: the prompt-driven generation (seemingly “something from nothing”) of de novo text, images, video, and music. It has become received wisdom – whether in the national press, the financial press, or even the blog posts of major technology companies – that generative AI is ChatGPT (or its LLM brethren). In fact, LLMs like ChatGPT are just one category within generative AI.

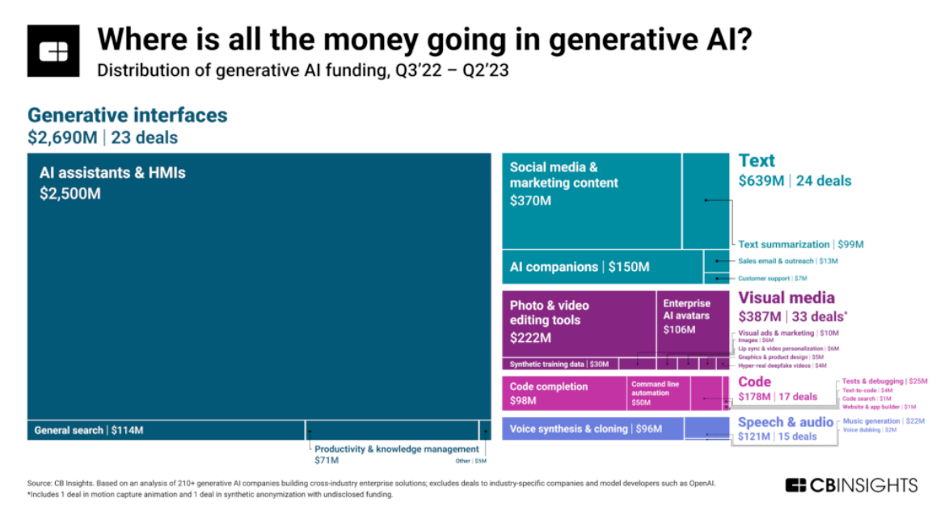

Fig. 1 From CB Insights

While the (increasingly commoditized) LLM-based gen-AI applications will indeed change the ways in which business gets done, what gets lost in the conversation is the impact of generative AI on purely enterprise applications. Generative AI is a broad and powerful set of technologies that includes not only the Transformer-based GPT but also variational autoencoders (VAEs; Kingma & Welling 2019), diffusion models, generative adversarial networks, and Poisson flow generative models. All of them have enterprise-level impact. VAEs, for example, despite their impenetrable mouthful of a name, have proven useful in financial trading by generating synthetic yet realistic volatility surfaces for pricing and hedging derivatives (Bergeron et al. 2021).

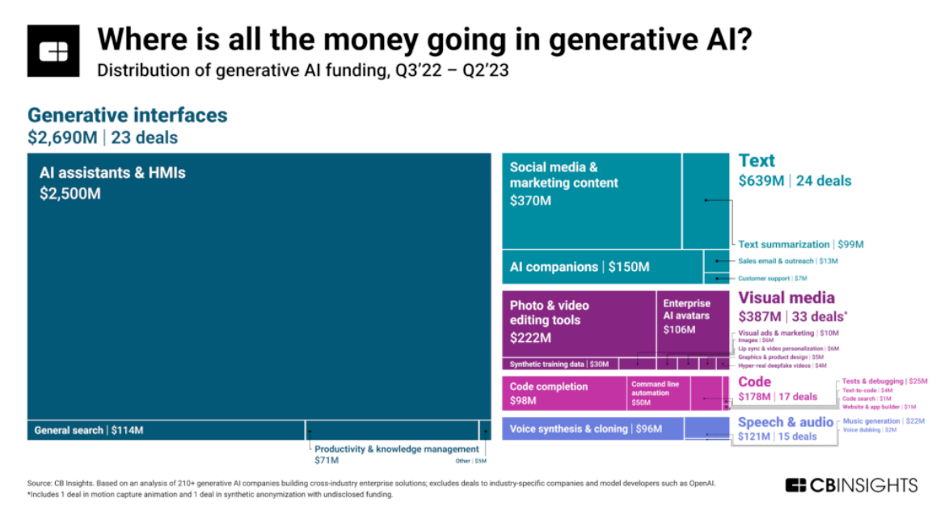

Fig. 2 Variational Autoencoder
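To make the VAE idea slightly more concrete, here is a minimal sketch, in plain Python, of the two ingredients that distinguish a VAE from an ordinary autoencoder: the reparameterization trick for sampling a latent vector, and the KL-divergence term that keeps the learned latent distribution close to a standard normal prior. The encoder and decoder are hypothetical hand-written stand-ins (`toy_encoder`, `toy_decoder`) for trained neural networks, and the "implied-vol slice" is made-up data; nothing here reproduces the volatility-surface models of Bergeron et al.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension.

    In an autodiff framework this form lets gradients flow to mu and
    log_var, which is what makes a VAE trainable by backpropagation.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))

def squared_error(x, x_hat):
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

# Hypothetical stand-ins for trained encoder/decoder networks.
def toy_encoder(x):
    mu = [sum(x) / len(x), x[0] - x[-1]]
    log_var = [-1.0, -1.0]
    return mu, log_var

def toy_decoder(z, n):
    # Map a 2-D latent code back to an n-point curve (e.g., one smile slice).
    return [z[0] + z[1] * (i / (n - 1) - 0.5) for i in range(n)]

def vae_loss(x, rng):
    """Negative ELBO up to scaling and constants: reconstruction error + KL term."""
    mu, log_var = toy_encoder(x)
    z = reparameterize(mu, log_var, rng)
    x_hat = toy_decoder(z, len(x))
    return squared_error(x, x_hat) + kl_to_standard_normal(mu, log_var)

def generate(n, rng, latent_dim=2):
    """Generation: draw z from the prior and decode it; no input data needed."""
    z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
    return toy_decoder(z, n)

rng = random.Random(0)
observed = [0.25, 0.22, 0.20, 0.21, 0.24]  # hypothetical implied-vol slice
print(round(vae_loss(observed, rng), 4))
print([round(v, 3) for v in generate(5, rng)])
```

The `generate` function is the point of the exercise: once training has shaped the latent space, new synthetic samples come from decoding random draws from the prior.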

Discriminative AI has to date been our most familiar variety of AI: models that classify input data by mapping the data’s features to its labels (e.g., is this a picture of a cat?). Generative AI, on the other hand, predicts (generates) data features from a given label or prompt (e.g., generate a picture of a cat). In both cases, the underlying models learn probabilities from the training data: a discriminative model estimates the probability of a label given the features, while a generative model estimates the probability of the features themselves, optionally conditioned on a label. Generative AI is a comparatively exciting form of AI because, by sampling from that learned distribution, it can generate completely novel results.
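The distinction can be sketched with the simplest possible generative classifier. The toy below, in plain Python with made-up one-dimensional data, fits a Gaussian p(x | y) for each label. Bayes' rule, p(y | x) ∝ p(x | y) p(y), then turns that generative model into a discriminative answer ("is this a cat?"), while sampling from p(x | y) produces brand-new feature values for a chosen label ("generate a cat"). It illustrates the two directions only; it is not how modern generative models are built.

```python
import math
import random
from statistics import mean, pstdev

def fit_class_gaussians(data):
    """Generative model: per label y, a Gaussian for p(x | y) plus the prior p(y)."""
    total = sum(len(xs) for xs in data.values())
    return {label: (mean(xs), pstdev(xs), len(xs) / total) for label, xs in data.items()}

def gaussian_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def classify(model, x):
    """Discriminative use: p(y | x) via Bayes' rule, normalized over labels."""
    joint = {y: gaussian_pdf(x, mu, s) * prior for y, (mu, s, prior) in model.items()}
    z = sum(joint.values())
    return {y: p / z for y, p in joint.items()}

def generate(model, label, rng, n=3):
    """Generative use: sample new feature values x ~ p(x | label)."""
    mu, sigma, _ = model[label]
    return [rng.gauss(mu, sigma) for _ in range(n)]

# Hypothetical 1-D feature (say, "ear pointiness") for two labels.
data = {"cat": [0.8, 0.9, 0.85, 0.95], "dog": [0.3, 0.4, 0.35, 0.2]}
model = fit_class_gaussians(data)
print(classify(model, 0.88))                       # "is this a cat?"
print(generate(model, "cat", random.Random(42)))   # "generate a cat"
```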

Precisely because generative AI technologies have demonstrated the ability to generate de novo results, it’s vital that we grasp their full potential and not fall into the intellectual trap of conflating gen-AI with ChatGPT/LLMs alone. Companies that understand gen-AI will be positioned for success. Those that do not will be positioned for senescence. Here we’ll present a short survey of generative AI, its technologies, and its enterprise applications, to better prepare you for the competitive landscape that now lies ahead.

Something from Nothing

ChatGPT is seemingly everywhere, its abilities a product of its underlying Transformer technology, a 2017 invention of Google (Vaswani et al. 2017). Transformer technology has demonstrated impacts well beyond language generation, across a range of generative AI tasks in the enterprise, from the Internet of Things to robotics. Complementing Transformer-driven generative AI are two noteworthy models which draw inspiration from basic physics: diffusion and Poisson flow generative models (PFGMs).
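The core operation the Transformer introduced (Vaswani et al. 2017) is scaled dot-product attention, softmax(QKᵀ/√d_k)V. Each position builds its output as a weighted average of every position's value vector, weighted by how well its query matches their keys. Below is a dependency-free sketch. The tiny matrices are arbitrary illustrative values, and real models add learned projections, multiple heads, masking, and much more.

```python
import math

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def matmul(a, b):
    # zip(*b) iterates over the columns of b.
    return [[sum(x * y for x, y in zip(r, c)) for c in zip(*b)] for r in a]

def transpose(m):
    return [list(c) for c in zip(*m)]

def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Three tokens, d_k = d_v = 2 (arbitrary illustrative numbers).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
out, w = attention(Q, K, V)
for row in w:
    print([round(x, 3) for x in row])
```

Nothing in this operation is specific to words. That is one reason the same machinery transfers to sensor streams, robot trajectories, and other enterprise data.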

Generative AI via diffusion (and here’s where Asimov’s “The Last Question” comes in) is based on simple thermodynamic principles. In physics, systems tend toward equilibrium: a state of minimum energy or, for an isolated system, maximum entropy. Our universe is of course not in equilibrium. Since the Big Bang it has trended steadily toward maximum entropy and heat death. How this process might be reversed is the constant (“last”) question posed to Multivac: is there a prompt (in practice, a set of invertible transformations…) that would reverse entropy and recreate a “something” universe from the “nothing” of maximum entropy?

Within gen-AI, diffusion models (Sohl-Dickstein et al. 2015) are a class of AI that learn the underlying probability distribution of their training data. Autoregressive generative models like GPT model the data distribution directly, one token at a time. Diffusion models instead learn to reverse a process that gradually transforms the data into a simple distribution (pure noise). By iteratively applying a series of learned denoising steps, starting from random noise, diffusion models can generate high-quality samples from complex data distributions.
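For readers who want the mathematics, the widely used DDPM ("denoising diffusion probabilistic model") formulation can be summarized as follows. The notation is that formulation's, not this article's: noise schedule β_t, α_t = 1 − β_t, cumulative product ᾱ_t, and a noise-prediction network ε_θ.

```latex
% Forward (noising) process: fixed, nothing is learned here
q(x_t \mid x_{t-1}) = \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right),
\qquad t = 1,\dots,T

% Closed form for jumping straight to step t, with \bar\alpha_t = \prod_{s=1}^{t} \alpha_s
x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I)

% Training: a network \epsilon_\theta learns to predict the injected noise
\mathcal{L}(\theta) = \mathbb{E}_{x_0,\,t,\,\epsilon}
\left[\,\lVert \epsilon - \epsilon_\theta(x_t, t) \rVert^2\,\right]

% Generation (reverse process): start from x_T \sim \mathcal{N}(0, I) and step backward,
% with z \sim \mathcal{N}(0, I) for t > 1 and z = 0 at the final step
x_{t-1} = \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{\beta_t}{\sqrt{1-\bar\alpha_t}}\,
\epsilon_\theta(x_t, t)\right) + \sigma_t z
```

Because each forward step adds only a little noise, its reverse is well approximated by a Gaussian whose mean the network learns. That is what makes the "something from nothing" run possible.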

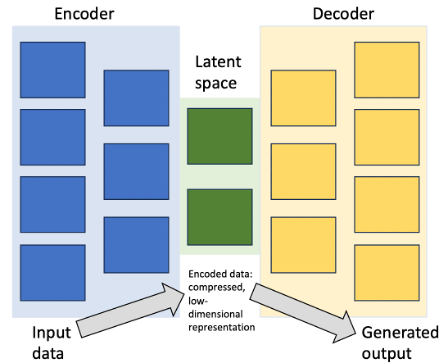

Fig. 3 From Croitoru et al. 2023

The canonical example of diffusion at work might be to take a photographic image and apply, step by step, a mathematical noise-injection function to the photo until it eventually resembles the grainy static (maximum entropy) we see on disconnected TV sets. But each noising step is small and mathematically well defined, so its reverse can be learned: a neural network is trained to undo it. This means that when presented with what appears to be a random noise data set (see Fig. 3), we can apply the learned denoising functions as a reverse process, ending with a sample from the target distribution and seemingly conjuring “something from nothing”. Pure magic. (In the end, this was the prompt Multivac was striving to find to achieve its generative goal.)
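The round trip can be demonstrated numerically with a DDPM-style update. The sketch below, in plain Python, uses a hypothetical 1-D data distribution, N(3.0, 0.5²). It first shows that the closed-form forward process washes real samples out to near-standard-normal noise. It then generates new samples by running the reverse process from pure noise. Nothing is trained here. The learned noise predictor ε_θ is replaced by its exact closed form, E[ε | x_t], which exists only because the toy data is Gaussian. The schedule (T = 200, β rising linearly from 0.0001 to 0.1) is an arbitrary demo choice.

```python
import math
import random

# Noise schedule (arbitrary demo values): T steps, beta rising linearly.
T = 200
betas = [1e-4 + (0.1 - 1e-4) * t / (T - 1) for t in range(T)]
alphas = [1.0 - b for b in betas]
alpha_bars = []
running = 1.0
for a in alphas:
    running *= a
    alpha_bars.append(running)  # alpha_bars[t] = product of alphas[0..t]

# Hypothetical target data distribution: 1-D Gaussian N(DATA_MEAN, DATA_STD^2).
DATA_MEAN, DATA_STD = 3.0, 0.5

def noise_forward(x0, t, rng):
    """Closed-form forward process: x_t = sqrt(abar_t) x0 + sqrt(1 - abar_t) eps."""
    ab = alpha_bars[t]
    return math.sqrt(ab) * x0 + math.sqrt(1.0 - ab) * rng.gauss(0.0, 1.0)

def ideal_eps_predictor(x_t, t):
    """Stand-in for a trained network eps_theta(x_t, t).

    For Gaussian data the best possible prediction E[eps | x_t] has a closed
    form; a real model would have to learn this from samples.
    """
    ab = alpha_bars[t]
    var_xt = ab * DATA_STD ** 2 + (1.0 - ab)
    return math.sqrt(1.0 - ab) * (x_t - math.sqrt(ab) * DATA_MEAN) / var_xt

def sample(rng):
    """Reverse (denoising) process: start from pure noise, step t = T-1 ... 0."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(T)):
        eps_hat = ideal_eps_predictor(x, t)
        x = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps_hat) / math.sqrt(alphas[t])
        if t > 0:  # no noise is added on the final step
            x += math.sqrt(betas[t]) * rng.gauss(0.0, 1.0)
    return x

def mean_std(values):
    m = sum(values) / len(values)
    return m, math.sqrt(sum((v - m) ** 2 for v in values) / len(values))

rng = random.Random(0)
# Forward: real data samples are washed out to (nearly) standard normal noise.
fwd_mean, fwd_std = mean_std(
    [noise_forward(rng.gauss(DATA_MEAN, DATA_STD), T - 1, rng) for _ in range(2000)]
)
# Reverse: starting from pure noise, samples land on the data distribution.
gen_mean, gen_std = mean_std([sample(rng) for _ in range(2000)])
print(f"after noising: mean={fwd_mean:.2f}, std={fwd_std:.2f}")
print(f"generated:     mean={gen_mean:.2f}, std={gen_std:.2f}")
```

In a real system the only thing that changes conceptually is `ideal_eps_predictor`: it becomes a neural network trained with the noise-prediction loss, so the same loop can generate images, weather fields, or molecular poses.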

Diffusion’s Enterprise Use Cases Denoised

On the consumer side, diffusion is the basis of many generative AI applications that produce photorealistic images. But image generation turns out to be just one of the possible applications of diffusion, and enterprise applications of generative AI via diffusion are many. Diffusion-based generative AI has shown utility in everything from robotics (Kapelyukh et al. 2022, Chen et al. 2023, Mandi et al. 2022, Yu et al. 2023), to bioinformatics (Guo et al. 2023), weather forecasting (Hatanaka et al. 2023, Leinonen et al. 2023, Gao et al. 2023), architecture (Ploennigs & Berger 2022), energy forecasting (Capel & Dumas 2022), medical anomaly detection (Wolleb et al. 2022), and even in the generation of synthetic data – a promise of delivering equity and privacy – to help train other AI tasks (Sehwag et al. 2022, Ghalebikesabi et al. 2023).

Perhaps the most illustrative impact of diffusion AI on human populations (and on the business of pharmaceutical companies) is in the discovery of new drugs. Drug development is lengthy, costly, and difficult, with 90% failure rates. Consequently, one salient promise of generative AI via diffusion has been in drug design. Put simply: can a gen-AI model learn by “noising” a variety of valid protein-ligand poses, so that when presented with a novel protein, the denoising process generates multiple valid ligand binding poses?

Seminal work in using diffusion for drug discovery was recently done through MIT’s DiffDock (Corso et al. 2023), yielding cutting-edge results: “DIFFDOCK obtains a 38% top-1 success rate (RMSD < 2 Å) on PDBBind, significantly outperforming the previous state-of-the-art of traditional docking (23%) and deep learning (20%) methods. Moreover, while previous methods are not able to dock on computationally folded structures (maximum accuracy 10.4%), DIFFDOCK maintains significantly higher precision (21.7%)”.
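The headline metric in that quote is easy to misread, so a worked version may help. A predicted ligand pose counts as a success if the root-mean-square deviation (RMSD) of its atom positions from the experimentally determined pose is under 2 Å. The "top-1 success rate" is the fraction of test complexes whose single highest-ranked prediction succeeds. The sketch below assumes predicted and reference atoms are already matched one-to-one in the same coordinate frame; real benchmarks also account for symmetry-equivalent atoms. All coordinates are made up.

```python
import math

def rmsd(pred, ref):
    """Root-mean-square deviation between matched atom coordinates (in Å)."""
    if len(pred) != len(ref) or not pred:
        raise ValueError("atoms must be matched one-to-one")
    sq = sum((p[0] - r[0]) ** 2 + (p[1] - r[1]) ** 2 + (p[2] - r[2]) ** 2
             for p, r in zip(pred, ref))
    return math.sqrt(sq / len(pred))

def top1_success_rate(complexes, threshold=2.0):
    """Fraction of complexes whose top-ranked pose is within `threshold` Å RMSD.

    Each complex is (ranked_poses, reference_pose); ranked_poses[0] is the
    model's most confident prediction.
    """
    hits = sum(1 for ranked, ref in complexes if rmsd(ranked[0], ref) < threshold)
    return hits / len(complexes)

# Hypothetical 3-atom ligand: a reference pose and two candidate poses.
ref = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (1.5, 1.5, 0.0)]
close = [(0.3, 0.0, 0.0), (1.8, 0.0, 0.0), (1.8, 1.5, 0.0)]  # every atom shifted 0.3 Å
far = [(3.0, 0.0, 0.0), (4.5, 0.0, 0.0), (4.5, 1.5, 0.0)]    # every atom shifted 3.0 Å

complexes = [([close, far], ref), ([far, close], ref)]
print(rmsd(close, ref), rmsd(far, ref))  # 0.3 (up to float rounding) and 3.0
print(top1_success_rate(complexes))      # 0.5: only the first complex ranks a good pose first
```

Note that the second complex contains a good pose but still counts as a failure, because the metric only credits the model's top-ranked guess.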

Similar breakthroughs in protein design have been repeatedly obtained using diffusion gen-AI (Watson et al. 2023, Yim et al. 2023). We have also now begun to see the commercialization of such efforts through projects such as OpenBioML and through Generate Biomedicines’ Chroma platform.

Multivac would be pleased to know that there’s yet another generative AI approach, one with similarities to diffusion, that also harnesses concepts of basic physics: the Poisson flow generative model (Xu et al. 2022). While diffusion is based on the fundamentals of thermodynamics, Poisson flow generative models are based on the fundamentals of electrostatics. Specifically, PFGMs treat data points as electric charges and generate new samples by following the lines of the electric field those charges create. Though a recent breakthrough, PFGMs have already started to show their generative promise (Xu et al. 2023, Ge et al. 2023), and might soon be generalized for broader application.
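To give a feel for the electrostatics, here is a toy, dependency-free sketch in the spirit of PFGM for 1-D data. Each data point becomes a unit charge on the z = 0 line of a 2-D augmented space. The field from all charges is summed using the (N+1)-dimensional form E(x) ∝ Σᵢ (x − xᵢ)/‖x − xᵢ‖^(N+1). A sample is produced by starting far above the data and walking against the field until z reaches 0. The two-point dataset, the starting points, and the fixed-length normalized step rule are illustrative assumptions. As we understand it, the published method instead learns the field with a neural network, draws starting points from a large hemisphere, and integrates with an ODE solver.

```python
import math

def poisson_field(point, charges):
    """Empirical Poisson field at `point` in (N+1)-D space from unit charges.

    Each charge contributes (point - charge) / ||point - charge||^(N+1),
    where N + 1 is the dimension of the augmented space.
    """
    dim = len(point)
    field = [0.0] * dim
    for c in charges:
        diff = [p - q for p, q in zip(point, c)]
        dist = math.sqrt(sum(d * d for d in diff))
        for i in range(dim):
            field[i] += diff[i] / dist ** dim
    return field

def trace_to_data(start, charges, step=0.01, max_steps=10_000):
    """Walk against the field (toward the charges) until z drops to 0."""
    point = list(start)
    for _ in range(max_steps):
        e = poisson_field(point, charges)
        norm = math.sqrt(sum(v * v for v in e))
        point = [p - step * v / norm for p, v in zip(point, e)]
        if point[-1] <= 0.0:  # crossed the data plane z = 0
            break
    return point[:-1]  # drop the augmented z coordinate

# Hypothetical 1-D dataset {-1, +1}, embedded at z = 0 in 2-D augmented space.
data = [(-1.0, 0.0), (1.0, 0.0)]
print(trace_to_data((0.3, 5.0), data))   # lands near x = +1
print(trace_to_data((-0.3, 5.0), data))  # lands near x = -1
```

Far from the data, the field lines spread out almost radially, so starting points spread over a large region map back onto the data. That spreading is the property PFGM exploits to turn simple starting distributions into samples.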

Thermodynamics, Electrostatics & a VAE/GAN Diet?

“Adversarial training is the coolest thing since sliced bread.”

Yann LeCun

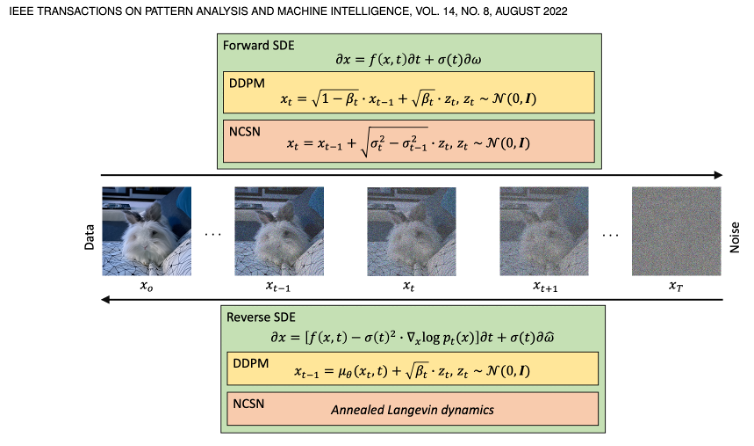

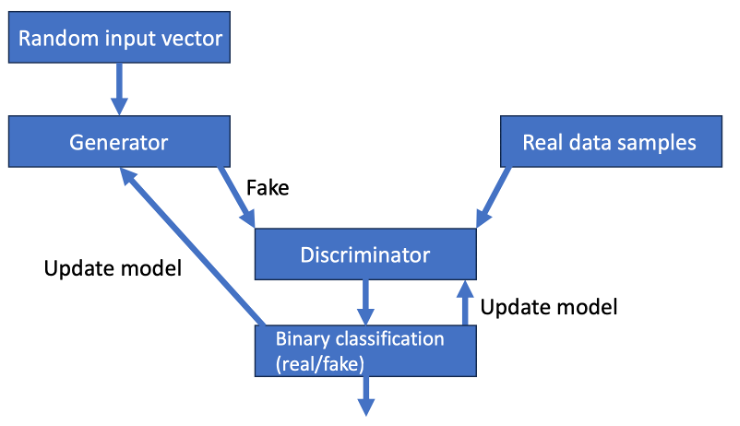

A final generative model worthy of mention is the generative adversarial network (GAN; Goodfellow et al. 2014). GANs first achieved prominence through their ability to generate realistic images of human faces (Karras et al. 2017). GANs work on the simple principle of two competing (cooperating!) neural networks. One, the discriminator, is trained to distinguish real data (e.g., actual photos of real people) from generated data (images of synthetic people). The second, the generator, is trained to produce generated data capable of fooling the discriminator. The goal is for the GAN to reach a state where the discriminator can no longer distinguish between real and generated data. (As a consequence, the GAN can then generate credible images of synthetic people.)

Fig. 4 Generative Adversarial Network
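The adversarial game can be written down compactly. In the original formulation (Goodfellow et al. 2014), the discriminator D maximizes log D(x) + log(1 − D(G(z))) while the generator G tries to minimize it. In practice, generators usually maximize log D(G(z)) instead, the so-called non-saturating variant. The sketch below computes those losses from discriminator outputs in plain Python and shows the equilibrium described above. When D can no longer tell real from generated data, it outputs 1/2 everywhere, and its loss settles at 2·ln 2 ≈ 1.386. The probability values are hypothetical, and no networks are trained.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0.

    d_real / d_fake are the discriminator's probabilities that each sample is real.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: push D(G(z)) toward 1, i.e., fool D."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Early in training: D easily spots the fakes, so D's loss is low and G's is high.
print(discriminator_loss([0.9, 0.95], [0.05, 0.1]), generator_loss([0.05, 0.1]))
# At equilibrium: D is reduced to guessing, outputting 0.5 for everything.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 2 * math.log(2))
```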

Beyond mere image generation, GANs have proven themselves in many enterprise-class applications. GANs have shown their efficacy in fraud detection (Charitou et al. 2021), including the detection of fraudulent online reviews (Shehnepoor et al. 2021), and are helping Swedbank in fraud and money laundering prevention. They have utility in financial applications (Eckerli & Osterrieder 2021, Takahashi et al. 2019), architecture (Huang et al. 2021, As et al. 2018), stock price prediction (Sonkiya et al. 2021), and predicting building power demand (Tian et al. 2022, Ye et al. 2022). GANs can be used in automobile assembly lines (Mumbelli et al. 2023), cybersecurity (Chen & Jiang 2019), traffic prediction (Sun et al. 2021, Zhang et al. 2021), weather prediction (Bihlo 2021, Ravuri et al. 2021, Ji et al. 2022), the generation of synthetic medical data (Vaccari et al. 2021), and drug design (Kao et al. 2023). Enterprise applications all.

Confirming the potential of GANs, in June 2023 Insilico Medicine entered human clinical trials for its drug INS018_055, a treatment for chronic lung disease. “It is the first fully generative AI drug to reach human clinical trials, and specifically Phase II trials with patients,” Alex Zhavoronkov, founder and CEO of Insilico Medicine, told CNBC.

The Gen-AI Escapes the Bottle

Generative AI, as with all computing technologies, is freighted with vulnerabilities. Diffusion models present a new cybersecurity attack vector, as recently highlighted via BadDiffusion (Chou et al. 2022). In this work, the diffusion process is compromised during training. During the inference stage, the backdoored diffusion model behaves normally for normal data, but stealthily generates a targeted outcome when it receives an implanted trigger signal: “BadDiffusion can consistently lead to compromised diffusion models with high utility and target specificity.”

In addition to its better-known vulnerabilities, generative AI for images has been shown to produce biased results (Thomas & Thompson 2023), while LLMs themselves are susceptible to attack (Zou et al. 2023), not to mention the likes of BlackMamba, WormGPT, and PoisonGPT. New technologies always lead to new vulnerabilities. Defeating new attack vectors will require cybersecurity innovation, such as the “immunization via perturbation” approach proposed for images (Salman et al. 2023) and the Glaze tool, as well as new practices, such as those put forward by the Partnership on AI.

AI’s Generative Generation

“At odd and unpredictable times, we cling in fright to the past.”

Isaac Asimov

Some industry observers have seized upon generative AI as the force that will (finally) disrupt Big Data / AI giants such as Google. The evidence suggests this is unlikely. Google, for its part, not only conducts much of the foundational research underlying generative AI (Ho et al. 2022, Agostinelli et al. 2023, PaLM 2) but is also actively bringing the technology to market (see Singhal et al. 2023, and Google’s work with the Mayo Clinic). It invented generative AI’s most protean technology, the Transformer. It also supplies a tensor-centric software platform and fit-for-purpose tensor chipsets in its server fabric, all within data centers that have themselves been optimized for efficiency using neural networks (Evans & Gao 2016). And let’s not forget the most important ingredient in generative AI’s success: Google’s seemingly endless supply of money to fuel continuing AI research and development. Instead of viewing Google as being at imminent risk of disruption, we might be better advised to examine the company’s every step to see what we should prepare to emulate or prepare to protect against.

At a minimum, of course, we need to understand that “generative AI” doesn’t narrowly mean the mere generation of text. Unfortunately, the popular press often misreads this, with headlines like “There’s No Such Thing as Artificial Intelligence”, and so do technology company blogs and reports from financial services companies. The next time we hear such pronouncements, it’s important to bear in mind all of gen-AI’s enterprise use cases that do not depend on LLMs (with all of LLMs’ copyright baggage). Instead, we need to consider what generative AI really means for our businesses: AI that generates better weather forecasts, traffic flows, architectural designs, manufacturing, power utilization, and cybersecurity; AI that generates better drugs to extend and improve our lives; and even AI that generates better data(!).

We have definitively entered the AI Century. For better or worse, there will be no return to the sleepy, pre-AI days of Business As Usual. To compete, companies of all sizes must understand and grasp the essential machinery of the AI Century: generative AI. There is no future without it.
